Stopthatgirl7

Hey, y’all! Just another random, loudmouthed, opinionated, Southern-fried nerdy American living abroad.

This is my lemmy account because I got sick of how unstable kbin dot social was.

Mastodon: @stopthatgirl7

- 84 Posts

- 6 Comments

Joined 7M ago

Cake day: Mar 07, 2024


- www.404media.co

- 14h

In June, the U.S. National Archives and Records Administration (NARA) gave employees a presentation and tech demo called “AI-mazing Tech-venture” in which Google’s Gemini AI was presented as a tool archives employees could use to “enhance productivity.” During a demo, the AI was queried with questions about the John F. Kennedy assassination, according to a [*copy of the presentation*](https://cdn.muckrock.com/foia_files/2024/09/19/2024_Information_Services_Town_Hall_Presentation_Redacted.pdf?ref=404media.co) obtained by 404 Media using a public records request.

In December, NARA plans to launch a public-facing AI-powered chatbot called “Archie AI,” 404 Media has learned. “The National Archives has big plans for AI,” a NARA spokesperson told 404 Media. “It’s going to be essential to how we conduct our work, how we scale our services for Americans who want to be able to access our records from anywhere, anytime, and how we ensure that we are ready to care for the records being created today and in the future.”

[*Employee chat logs*](https://cdn.muckrock.com/foia_files/2024/09/19/EVT506703_Chat_Log.docx_Redacted.pdf?ref=404media.co) given during the presentation show that National Archives employees are concerned about the idea that AI tools will be used in archiving, a practice that is inherently concerned with accurately recording history.

One worker who attended the presentation told 404 Media “I suspect they're going to introduce it to the workplace. I'm just a person who works there and hates AI bullshit.”

- futurism.com

- 15d

A former jockey who was left paralyzed from the waist down after a horse riding accident was able to walk again thanks to a cutting-edge piece of robotic tech: a $100,000 ReWalk Personal exoskeleton.

When one of its small parts malfunctioned, however, the entire device stopped working. Desperate to gain his mobility back, he reached out to the manufacturer, Lifeward, for repairs. But it turned him away, claiming his exoskeleton was too old, [*404 Media* reports](https://www.404media.co/paralyzed-jockey-loses-ability-to-walk-after-manufacturer-refuses-to-fix-battery-for-his-100-000-exoskeleton/).

"After 371,091 steps my exoskeleton is being retired after 10 years of unbelievable physical therapy," Michael Straight [posted on Facebook](https://www.facebook.com/mj.straight.9/posts/pfbid08WJkmwePBXPXrktEKT1PpbTutQkysBh8nRoAy6dC1SSZKAe5Ti9q4ETYg7fHC5hDl) earlier this month. "The reasons why it has stopped is a pathetic excuse for a bad company to try and make more money."

- www.ftc.gov

- 17d

The Federal Trade Commission is taking action against multiple companies that have relied on artificial intelligence as a way to supercharge deceptive or unfair conduct that harms consumers, as part of its new law enforcement sweep called Operation AI Comply.

The cases being announced today include actions against a company promoting an AI tool that enabled its customers to create fake reviews, a company claiming to sell “AI Lawyer” services, and multiple companies claiming that they could use AI to help consumers make money through online storefronts.

“Using AI tools to trick, mislead, or defraud people is illegal,” said FTC Chair Lina M. Khan. “The FTC’s enforcement actions make clear that there is no AI exemption from the laws on the books. By cracking down on unfair or deceptive practices in these markets, FTC is ensuring that honest businesses and innovators can get a fair shot and consumers are being protected.”

- arstechnica.com

- 19d

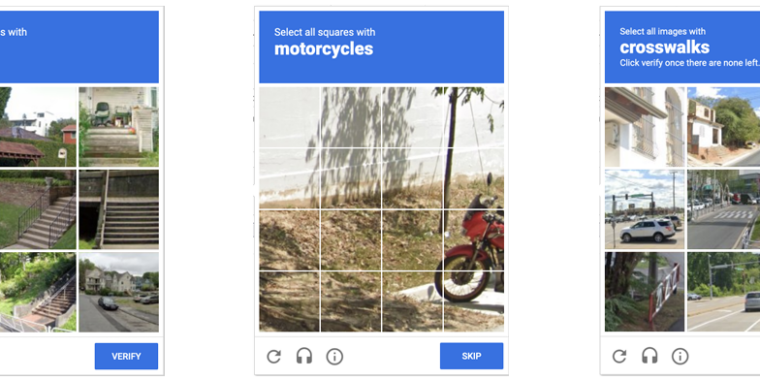

Anyone who has been surfing the web for a while is probably used to clicking through a CAPTCHA grid of street images, identifying everyday objects to prove that they're a human and not an automated bot. Now, though, new research claims that locally run bots using specially trained image-recognition models can match human-level performance in this style of CAPTCHA, achieving a 100 percent success rate despite being decidedly not human.

ETH Zurich PhD student Andreas Plesner and his colleagues' new research, [available as a pre-print paper](https://arxiv.org/abs/2409.08831), focuses on Google's reCAPTCHA v2, which challenges users to identify which street images in a grid contain items like bicycles, crosswalks, mountains, stairs, or traffic lights. Google [began phasing that system out years ago](https://arstechnica.com/gadgets/2017/03/googles-recaptcha-announces-invisible-background-captchas/) in favor of an "invisible" reCAPTCHA v3 that analyzes user interactions rather than offering an explicit challenge.

Despite this, the older reCAPTCHA v2 is [still used by millions of websites](https://trends.builtwith.com/widgets/reCAPTCHA-v2). And even sites that use the updated reCAPTCHA v3 will sometimes [use reCAPTCHA v2 as a fallback](https://stackoverflow.com/questions/54215482/combining-recaptcha-v2-and-v3/63344009#63344009) when the updated system gives a user a low "human" confidence rating.

- www.niemanlab.org

- 23d

When German journalist [Martin Bernklau](https://www.linkedin.com/in/martin-bernklau-253aa0276/) typed his name and location into [Microsoft’s Copilot](https://copilot.microsoft.com/) to see how his articles would be picked up by the chatbot, the answers [horrified him](https://www.theregister.com/2024/08/26/microsoft_bing_copilot_ai_halluciation/). Copilot’s results asserted that Bernklau was an escapee from a psychiatric institution, a convicted child abuser, and a conman preying on widowers. For years, Bernklau had served as a courts reporter and the AI chatbot had [falsely blamed him](https://www.swr.de/swraktuell/baden-wuerttemberg/tuebingen/ki-macht-tuebinger-journalist-zum-kinderschaender-100.html) for the crimes whose trials he had covered.

The accusations against Bernklau weren’t true, of course, and are examples of generative AI’s [“hallucinations.”](https://www.ibm.com/topics/ai-hallucinations) These are inaccurate or nonsensical responses to a prompt provided by the user, and they’re [alarmingly common](https://www.scientificamerican.com/article/chatbot-hallucinations-inevitable/). Anyone attempting to use AI should always proceed with great caution, because information from such systems needs validation and verification by humans before it can be trusted.

But why did Copilot hallucinate these terrible and false accusations?

- www.404media.co

- 1M

The creator of an open source project that scraped the internet to determine the ever-changing popularity of different words in human language usage says that they are [*sunsetting the project*](https://github.com/rspeer/wordfreq/blob/master/SUNSET.md?ref=404media.co) because generative AI spam has poisoned the internet to a level where the project no longer has any utility.

Wordfreq is a program that tracked the ever-changing ways people used more than 40 different languages by analyzing millions of sources across Wikipedia, movie and TV subtitles, news articles, books, websites, Twitter, and Reddit. The system could be used to analyze changing language habits as slang and popular culture changed and language evolved, and was a resource for academics who study such things. In a [*note on the project’s*](https://github.com/rspeer/wordfreq/blob/master/SUNSET.md?ref=404media.co) GitHub, creator Robyn Speer wrote that the project “will not be updated anymore.”

- www.wired.com

- 1M

Eli Collins, a vice president of product management at [Google DeepMind](https://www.wired.com/tag/deepmind/), first demoed generative AI video tools for the company’s board of directors back in 2022. Despite the model’s slow speed, pricey cost to operate, and sometimes off-kilter outputs, he says it was an eye-opening moment for them to see fresh video clips generated from a random prompt.

Now, just a few years later, Google has [announced plans](https://blog.google/products/youtube/made-on-youtube-2024/) for a tool inside of the YouTube app that will allow anyone to generate AI video clips, using [the company’s Veo model](https://arstechnica.com/information-technology/2024/05/google-unveils-veo-a-high-definition-ai-video-generator-that-may-rival-sora/), and directly post them as part of [YouTube Shorts](https://www.wired.com/story/youtube-shorts-challenges-tiktok-with-music-making-ai-for-creators/). “Looking forward to 2025, we're going to let users create stand-alone video clips and shorts,” says Sarah Ali, a senior director of product management at YouTube. “They're going to be able to generate six-second videos from an open text prompt.” Ali says the update could help creators hunting for footage to fill out a video or trying to envision something fantastical. She is adamant that the Veo AI tool is not meant to [replace creativity](https://www.wired.com/story/picture-limitless-creativity-ai-image-generators/), but augment it.

- www.404media.co

- 1M

Snapchat is reserving the right to put its users’ faces in ads, according to terms of service related to its “My Selfie” tool (formerly “AI Selfies”), which allows users and their friends to create AI-generated images trained on their selfies.

Users have the option to opt out of this by toggling off a “feature” in the app called “See My Selfie in Ads,” but according to 404 Media’s testing this feature is on by default.

- apnews.com

- 1M

California Gov. Gavin Newsom signed three bills Tuesday to crack down on the use of artificial intelligence to create [false images or videos in political ads](https://apnews.com/article/california-ai-election-deepfakes-safety-regulations-eb6bbc80e346744dbb250f931ebca9f3) ahead of the 2024 election.

A new law, set to take effect immediately, makes it illegal to create and publish deepfakes related to elections 120 days before Election Day and 60 days thereafter. It also allows courts to stop distribution of the materials and impose civil penalties.

“Safeguarding the integrity of elections is essential to democracy, and it’s critical that we ensure AI is not deployed to undermine the public’s trust through disinformation, especially in today’s fraught political climate,” Newsom said in a statement. “These measures will help to combat the harmful use of deepfakes in political ads and other content, one of several areas in which the state is being proactive to foster transparent and trustworthy AI.”

- www.404media.co

- 1M

The first thing people saw when they searched Google for the artist Hieronymus Bosch was an AI-generated version of his *Garden of Earthly Delights*, one of the most famous paintings in art history.

Depending on what they are searching for, Google Search sometimes serves users a series of images above the list of links they usually see in results. As first spotted by a user on [*Twitter*](https://x.com/ItsTheTalia/status/1835092917418889710?ref=404media.co), when people searched for “Hieronymus Bosch” on Google, it included a couple of images from the real painting, but the first and largest image they saw was an AI-generated version of it.

- www.pcgamer.com

- 1M

Getting your game noticed is a tricky business when you have to punch through the noise of the [more than 10,000 new Steam games](https://steamdb.info/stats/releases/) releasing each year. Young Horses, the developer of Bugsnax and Octodad, has found itself in an even trickier spot: Thanks to Google, people are expecting a Bugsnax sequel that doesn't exist.

"We are not working on a Bugsnax sequel right now and I need AI bs to stop telling kids we are based on a wiki ideas fanfic," Young Horses co-founder and president [Philip Tibitoski tweeted](https://x.com/PTibz/status/1834555767220732011) earlier today. It turns out, through the wonders of algorithmic search result curation, Google's featured snippets have been informing people that Bugsnax 2 will be releasing in October 2024, despite the fact that neither Young Horses nor any other developer is making it.

- futurism.com

- 1M

An alleged scammer has been arrested on suspicion that he used AI to create a wild number of fake bands, and fake music to go with them, and faked untold streams with more bots to earn millions in ill-gotten revenue.

In a [press release](https://www.justice.gov/usao-sdny/pr/north-carolina-musician-charged-music-streaming-fraud-aided-artificial-intelligence), the Department of Justice announced that investigators have arrested 52-year-old North Carolina man Michael Smith, who has been charged over a purported seven-year scheme that involved using his real-life music skills to make more than $10 million in royalties.

Indicted on three counts involving money laundering and wire fraud, the Charlotte-area man faces a maximum of 20 years per charge.

- www.dexerto.com

- 1M

A media firm that has worked with the likes of Google and Meta has admitted that it can target adverts based on what you said out loud near device microphones.

Media conglomerate Cox Media Group (CMG) has been pitching tech companies on a new targeted advertising tool that uses audio recordings collected from smart home devices, according to a [404 Media investigation](https://www.404media.co/heres-the-pitch-deck-for-active-listening-ad-targeting/). The company is partners with Facebook, Google, Amazon, and Bing.

In a pitch deck presented to [Google](https://www.dexerto.com/tag/google/), [Facebook](https://www.dexerto.com/tag/facebook/), and others in November 2023, CMG referred to the technology used for monitoring and active listening as “Voice Data.” The firm also mentioned using artificial intelligence to collect data about consumers’ online behavior.

- www.crikey.com.au

- 1M

Artificial intelligence is worse than humans in every way at summarising documents and might actually create additional work for people, a government trial of the technology has found.

Amazon conducted the test earlier this year for Australia’s corporate regulator, the Australian Securities and Investments Commission (ASIC), using submissions made to an inquiry. The outcome of the trial was revealed in an [answer](https://www.aph.gov.au/DocumentStore.ashx?id=b4fd6043-6626-4cbe-b8ee-a5c7319e94a0) to a question on notice at the Senate select committee on adopting artificial intelligence.

The test involved testing generative AI models before selecting one to ingest five submissions from a parliamentary inquiry into audit and consultancy firms. The most promising model, Meta’s open source model Llama2-70B, was prompted to summarise the submissions with a focus on ASIC mentions, recommendations, references to more regulation, and to include the page references and context.

Ten ASIC staff, of varying levels of seniority, were also given the same task with similar prompts. Then, a group of reviewers blindly assessed the summaries produced by both humans and AI for coherency, length, ASIC references, regulation references and for identifying recommendations. They were unaware that this exercise involved AI at all.

These reviewers overwhelmingly found that the human summaries beat out their AI competitors on every criterion and on every submission, scoring 81% on an internal rubric compared with the machine’s 47%.

- www.theverge.com

- 1M

The price of some Canva subscriptions is set to skyrocket next year following the company’s aggressive rollout of generative AI features. Global customers for Canva Teams, a business-orientated subscription that supports adding multiple users, can expect prices to increase by just over 300 percent in some instances. Canva says the increase is justified due to the “expanded product experience” and value that generative AI tools have added to the platform.

In the US, some [Canva Teams users are reporting](https://x.com/wlsndrs/status/1829521548233122098) subscription increases from $120 per year for up to five users, to an eye-watering $500 per year. A 40 percent discount will be applied to bring that down to $300 for the first 12 months. In Australia, the flat $39.99 AUS (about $26 USD) per month fee for five users is switching to $13.50 AUS (about $9 USD) for each user. That means a team of five will pay at least 68 percent more, notwithstanding any other discounts.
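The percentages quoted above can be checked with a few lines of arithmetic. This is only a rough sketch using the figures from the excerpt; the helper name `percent_increase` and the variable names are made up for illustration, and the Australian case assumes a team of exactly five users.

```python
def percent_increase(old: float, new: float) -> float:
    """Return the percentage increase going from old to new."""
    return (new - old) / old * 100


# Figures quoted in the excerpt (variable names are illustrative).
us_old, us_new = 120.0, 500.0        # USD per year, up to five users
us_first_year = us_new * (1 - 0.40)  # 40 percent discount for the first 12 months
au_old = 39.99                       # AUD per month, flat fee for five users
au_new = 13.50 * 5                   # AUD per month, five users billed per seat

print(f"US list price:      +{percent_increase(us_old, us_new):.1f}%")
print(f"US first-year cost: ${us_first_year:.2f}")
print(f"AU team of five:    +{percent_increase(au_old, au_new):.1f}%")
```

The US jump from $120 to $500 works out to roughly 317 percent, which matches "just over 300 percent," and the Australian per-seat pricing comes to a little under 69 percent more for five users, consistent with "at least 68 percent."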

- hechingerreport.org

- 1M

Does AI actually help students learn? A recent experiment in a high school provides a cautionary tale.

Researchers at the University of Pennsylvania found that Turkish high school students who had access to ChatGPT while doing practice math problems did worse on a math test compared with students who didn’t have access to ChatGPT. Those with ChatGPT solved 48 percent more of the practice problems correctly, but they ultimately scored 17 percent worse on a test of the topic that the students were learning.

A third group of students had access to a revised version of ChatGPT that functioned more like a tutor. This chatbot was programmed to provide hints without directly divulging the answer. The students who used it did spectacularly better on the practice problems, solving 127 percent more of them correctly compared with students who did their practice work without any high-tech aids. But on a test afterwards, these AI-tutored students did no better. Students who just did their practice problems the old fashioned way — on their own — matched their test scores.

- techcrunch.com

- 2M

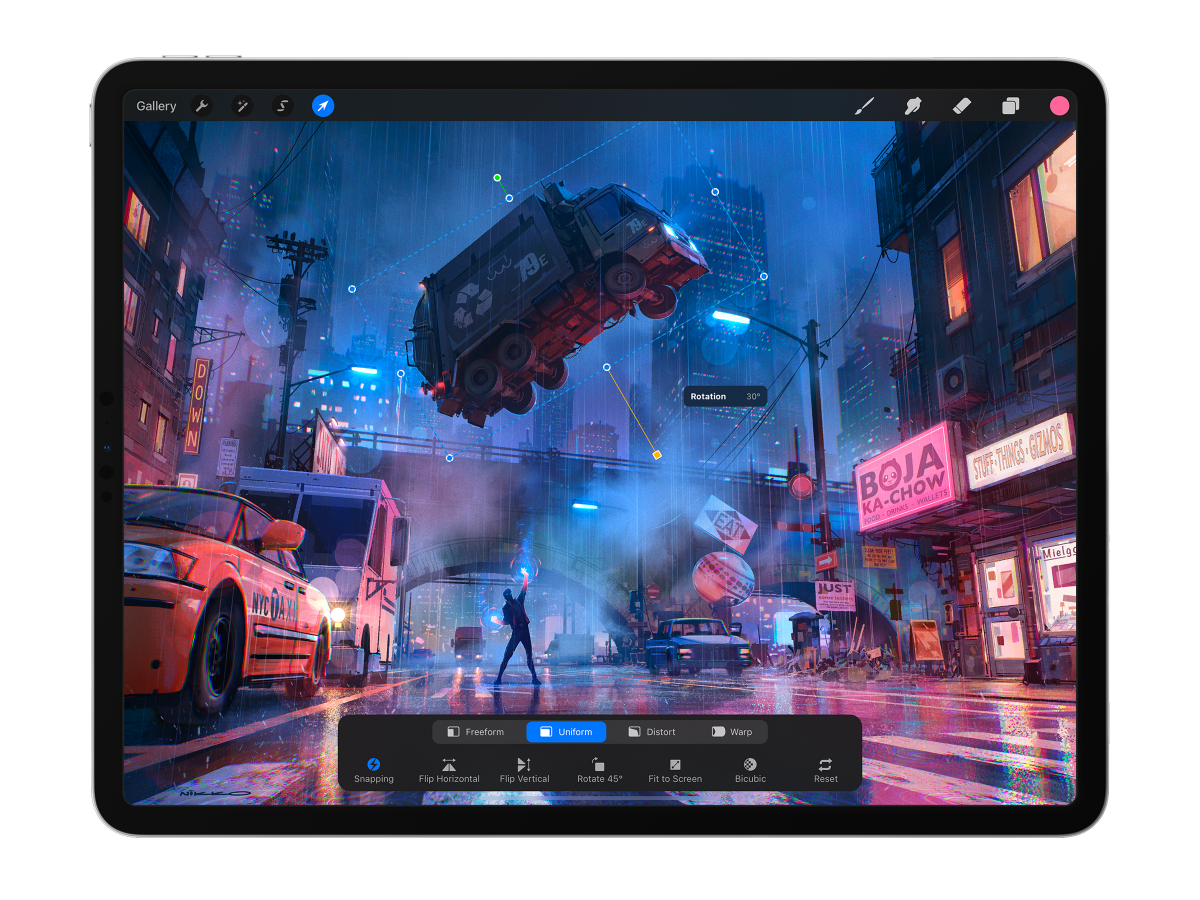

Popular iPad design app Procreate is coming out against generative AI, and has vowed never to introduce generative AI features into its products. The company said on its [website](https://procreate.com/ai) that although machine learning is a “compelling technology with a lot of merit,” the current path that generative AI is on is wrong for its platform.

Procreate goes on to say that it’s not chasing a technology that is a threat to human creativity, even though this may make the company “seem at risk of being left behind.”

Procreate CEO James Cuda released an even stronger statement against the technology in a video [posted to X](https://x.com/Procreate/status/1825311104584802470) on Monday.

- www.404media.co

- 2M

My investigation reveals that the AI images we see on Facebook are an evolution of a Facebook spam economy that has existed for years, driven by social media influencers, guides, services, and businesses in places like India, Pakistan, Indonesia, Thailand, and Vietnam, where the payouts generated by this content, which seem marginal by U.S. standards, go further. The spam comes from a mix of sources, from people manually creating images on their phones using off-the-shelf tools like Microsoft’s AI Image Creator to larger operations that use automated software to spam the platform. I also know that their methods work because I used them to flood Facebook with AI slop myself as a test.

- www.404media.co

- 2M

Nvidia scraped videos from YouTube and several other sources to compile training data for its AI products, internal Slack chats, emails, and documents obtained by 404 Media show.

When asked about legal and ethical aspects of using copyrighted content to train an AI model, Nvidia defended its practice as being “in full compliance with the letter and the spirit of copyright law.” Internal conversations at Nvidia viewed by 404 Media show that when employees working on the project raised questions about potential legal issues surrounding the use of datasets compiled by academics for research purposes and YouTube videos, managers told them they had clearance to use that content from the highest levels of the company.

- www.nature.com

- 3M

It is now clear that generative artificial intelligence (AI) such as large language models (LLMs) is here to stay and will substantially change the ecosystem of online text and images. Here we consider what may happen to GPT-{*n*} once LLMs contribute much of the text found online. We find that indiscriminate use of model-generated content in training causes irreversible defects in the resulting models, in which tails of the original content distribution disappear. We refer to this effect as ‘model collapse’ and show that it can occur in LLMs as well as in variational autoencoders (VAEs) and Gaussian mixture models (GMMs). We build theoretical intuition behind the phenomenon and portray its ubiquity among all learned generative models. We demonstrate that it must be taken seriously if we are to sustain the benefits of training from large-scale data scraped from the web. Indeed, the value of data collected about genuine human interactions with systems will be increasingly valuable in the presence of LLM-generated content in data crawled from the Internet.

- www.forbes.com

- 3M

[The new global study](https://www.upwork.com/hire/landing/?utm_medium=PaidSearch&utm_source=Bing&utm_campaign=SEMBrand_Bing_Domestic_Marketplace_Core&campaignid=506116836&utm_content=1274335616495213&utm_keyword=www%20upwork%20com&matchtype=p&device=c&partnerid=5106291ee9fb18bdf26e4f89158b83db&cq_plac&ad_id=79646080399393), in partnership with The Upwork Research Institute, interviewed 2,500 global C-suite executives, full-time employees and freelancers. Results show that the optimistic expectations about AI's impact are not aligning with the reality faced by many employees. The study identifies a disconnect between the high expectations of managers and the actual experiences of employees using AI.

Despite 96% of C-suite executives expecting AI to boost productivity, the study reveals that 77% of employees using AI say it has added to their workload and created challenges in achieving the expected productivity gains. Not only is AI increasing the workloads of full-time employees, it’s hampering productivity and contributing to employee burnout.

- arstechnica.com

- 3M

It's sensible for businesses to shift from physical media sales. Per [CNBC's calculations](https://www.cnbc.com/2019/11/08/the-death-of-the-dvd-why-sales-dropped-more-than-86percent-in-13-years.html), DVD sales fell over 86 percent between 2008 and 2019. [Research from](https://www.motionpictures.org/wp-content/uploads/2022/03/MPA-2021-THEME-Report-FINAL.pdf) the Motion Picture Association in 2021 found that physical media represented 8 percent of the home/mobile entertainment market in the US, falling behind digital (80 percent) and theatrical (12 percent).

But as physical media gets less lucrative and the shuttering of businesses makes optical discs harder to find, the streaming services that largely replaced them are getting [aggravating and unreliable](https://arstechnica.com/gadgets/2024/05/all-the-ways-streaming-services-are-aggravating-their-subscribers-this-week/). And with the streaming industry becoming more competitive and profit-hungry than ever, you never know if the movie/show that most attracted you to a streaming service will still be available when you finally get a chance to sit down and watch. Even [paid-for online libraries](https://arstechnica.com/gadgets/2023/12/playstation-is-erasing-1318-seasons-of-discovery-shows-from-customer-libraries/) that were marketed as available "forever" have been [ripped away from customers](https://arstechnica.com/gadgets/2024/02/sony-claims-to-offer-subs-appropriate-value-for-deleting-digital-libraries/).

When someone buys or rents a DVD, they know exactly what content they're paying for and for how long they'll have it (assuming they take care of the physical media). They can also watch the content if the Internet goes out and be certain that they're getting uncompressed 4K resolution. DVD viewers are also less likely to be bombarded with [ads whenever they pause](https://arstechnica.com/gadgets/2024/05/prime-video-subs-will-soon-see-ads-for-amazon-products-when-they-hit-pause/) and can get around an [ad-riddled smart TV home screen](https://arstechnica.com/gadgets/2024/04/roku-ad-push-continues-with-plans-to-put-video-ads-in-os-home-screen/) (nothing's perfect; some DVDs have unskippable commercials).

- www.wired.com

- 4M

The music industry has officially declared war on Suno and Udio, two of the most prominent AI music generators. A group of music labels including Universal Music Group, Warner Music Group, and Sony Music Group filed lawsuits in US federal court on Monday morning alleging copyright infringement on a “massive scale.”

The plaintiffs seek damages up to $150,000 per work infringed. The lawsuit against Suno was filed in Massachusetts, while the case against Udio’s parent company Uncharted Inc. was filed in New York. Suno and Udio did not immediately respond to a request to comment.

“Unlicensed services like Suno and Udio that claim it’s ‘fair’ to copy an artist’s life’s work and exploit it for their own profit without consent or pay set back the promise of genuinely innovative AI for us all,” Recording Industry Association of America chair and CEO Mitch Glazier said in a press release.

- www.wired.com

- 4M

A WIRED analysis and [one](https://rknight.me/blog/perplexity-ai-is-lying-about-its-user-agent/) carried out by developer Robb Knight suggest that Perplexity is able to achieve this partly through apparently ignoring a widely accepted web standard known as the Robots Exclusion Protocol to surreptitiously scrape areas of websites that operators do not want accessed by bots, despite [claiming](https://docs.perplexity.ai/docs/perplexitybot) that it won’t. WIRED observed a machine tied to Perplexity—more specifically, one on an Amazon server and almost certainly operated by Perplexity—doing this on wired.com and across other Condé Nast publications.

The WIRED analysis also demonstrates that despite [claims](https://www.perplexity.ai/hub/blog/perplexity-raises-series-b-funding-round) that Perplexity’s tools provide “instant, reliable answers to any question with complete sources and citations included,” doing away with the need to “click on different links,” its chatbot, which is capable of accurately summarizing journalistic work with appropriate credit, is also prone to [bullshitting](https://link.springer.com/article/10.1007/s10676-024-09775-5), in the technical sense of the word.
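For readers unfamiliar with the Robots Exclusion Protocol mentioned above, here is a minimal sketch of how a well-behaved crawler would consult a site's `robots.txt` before fetching a page, using Python's standard `urllib.robotparser`. The `robots.txt` contents, the `example.com` URL, and the `may_fetch` helper are hypothetical; the `PerplexityBot` user-agent name is taken from the linked Perplexity docs URL. This does not reproduce what WIRED or Perplexity actually ran.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: blocks one named crawler, allows everyone else.
ROBOTS_TXT = """\
User-agent: PerplexityBot
Disallow: /

User-agent: *
Allow: /
"""


def may_fetch(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# The named crawler is disallowed everywhere; other agents are allowed.
print(may_fetch("PerplexityBot", "https://example.com/story/some-article"))
print(may_fetch("SomeOtherBot", "https://example.com/story/some-article"))
```

The check is entirely voluntary: nothing in the protocol stops a crawler from skipping it or from sending a different user-agent string, which is the kind of behavior the analyses above describe.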

- www.bbc.com

- 4M

McDonald's is removing artificial intelligence (AI) powered ordering technology from its drive-through restaurants in the US, after customers shared its comical mishaps online.

A trial of the system, which was developed by IBM and uses voice recognition software to process orders, was announced in 2019.

It has not proved entirely reliable, however, resulting in viral videos of bizarre misinterpreted orders ranging from bacon-topped ice cream to hundreds of dollars' worth of chicken nuggets.

- www.bbc.com

- 4M

**If you're worried about how AI will affect your job, the world of copywriters may offer a glimpse of the future.**

Writer Benjamin Miller – not his real name – was thriving in early 2023. He led a team of more than 60 writers and editors, publishing blog posts and articles to promote a tech company that packages and resells data on everything from real estate to used cars. "It was really engaging work," Miller says, a chance to flex his creativity and collaborate with experts on a variety of subjects. But one day, Miller's manager told him about a new project. "They wanted to use AI to cut down on costs," he says. (Miller signed a non-disclosure agreement, and asked the BBC to withhold his and the company's name.)

A month later, the business introduced an automated system. Miller's manager would plug a headline for an article into an online form, an AI model would generate an outline based on that title, and Miller would get an alert on his computer. Instead of coming up with their own ideas, his writers would create articles around those outlines, and Miller would do a final edit before the stories were published. Miller only had a few months to adapt before he got news of a second layer of automation. Going forward, ChatGPT would write the articles in their entirety, and most of his team was fired. The few people remaining were left with an even less creative task: editing ChatGPT's subpar text to make it sound more human.

By 2024, the company laid off the rest of Miller's team, and he was alone. "All of a sudden I was just doing everyone's job," Miller says. Every day, he'd open the AI-written documents to fix the robot's formulaic mistakes, churning out the work that used to employ dozens of people.

- petapixel.com

- 4M

Adobe’s employees are typically of the same opinion of the company as its users, having internally already [expressed concern that AI could kill the jobs of their customers](https://petapixel.com/2023/07/31/adobe-staff-worry-their-ai-could-kill-the-jobs-of-their-own-customers/). That continued this week in internal discussions, where exasperated employees implored leadership to not let it be the “evil” company customers think it is.

This past week, Adobe became the subject of a public relations firestorm after [it pushed an update to its terms of service](https://petapixel.com/2024/06/06/photographers-outraged-by-adobes-new-privacy-and-content-terms/) that many users saw at best as overly aggressive and at worst as a rights grab. Adobe quickly [clarified it isn’t spying on users](https://petapixel.com/2024/06/07/adobe-responds-to-terms-of-use-controversy-says-it-isnt-spying-on-users/) and even promised to go back and [adjust its terms of service in response](https://petapixel.com/2024/06/10/adobe-revising-terms-of-use-to-clarify-content-licensing-ai-and-privacy/).

For many though, this was not enough, and online discourse surrounding Adobe continues to be mostly negative. According to internal Slack discussions [seen by *Business Insider*](https://www.businessinsider.com/adobe-employees-slam-company-over-ai-controversy-2024-6), as before, Adobe’s employees seem to be siding with users and are actively complaining about Adobe’s poor communications and inability to learn from past mistakes.

- www.theverge.com

- 4M

Meta is putting plans for its [AI assistant](https://www.theverge.com/2024/4/18/24133808/meta-ai-assistant-llama-3-chatgpt-openai-rival) on hold in Europe after receiving objections from Ireland’s privacy regulator, the company [announced on Friday](https://about.fb.com/news/2024/06/building-ai-technology-for-europeans-in-a-transparent-and-responsible-way/).

In a blog post, Meta said the Irish Data Protection Commission (DPC) asked the company to delay training its large language models on content that had been publicly posted to Facebook and Instagram profiles.

Meta said it is “disappointed” by the request, “particularly since we incorporated regulatory feedback and the European \[Data Protection Authorities] have been informed since March.” [Per the *Irish Independent*](https://www.independent.ie/business/technology/meta-pauses-ai-data-collection-in-eu-following-irish-dpc-request/a2000443736.html), Meta had recently begun notifying European users that it would collect their data and offered an opt-out option in an attempt to comply with European privacy laws.

- appleinsider.com

- 4M

A British man is ridiculously attempting to sue Apple following a divorce, caused by his wife finding messages to a prostitute he deleted from his [iPhone](https://appleinsider.com/inside/iphone "iPhone") that were still accessible on an iMac.

In the last years of his marriage, a man referred to as "Richard" started to use the services of prostitutes, without his wife's knowledge. To try and keep the communications secret, he used iMessages on his iPhone, but then deleted the messages.

Despite being careful on his iPhone to cover his tracks, he didn't count on Apple's ecosystem automatically synchronizing his messaging history with the family [iMac](https://appleinsider.com/inside/imac "iMac"). Apparently, he wasn't careful enough to use Family Sharing for iCloud, or discrete user accounts on the Mac.

*The Times* [reports](https://www.thetimes.com/article/husband-pursues-apple-after-wife-finds-deleted-messages-to-prostitute-bbhlg2x07) the wife saw the message when she opened [iMessage](https://appleinsider.com/inside/imessage "iMessage") on the iMac. She also saw years of messages to prostitutes, revealing a long period of infidelity by her husband.

- arstechnica.com

- 4M

Microsoft is pivoting its company culture to make security a top priority, President Brad Smith testified to Congress on Thursday, promising that security will be "more important even than the company’s work on artificial intelligence."

Satya Nadella, Microsoft's CEO, "has taken on the responsibility personally to serve as the senior executive with overall accountability for Microsoft’s security," Smith told Congress.

His testimony comes after Microsoft admitted that it could have taken steps to prevent two aggressive nation-state cyberattacks from [China](https://arstechnica.com/security/2023/09/hack-of-a-microsoft-corporate-account-led-to-azure-breach-by-chinese-hackers/) and [Russia](https://arstechnica.com/tech-policy/2021/04/us-government-strikes-back-at-kremlin-for-solarwinds-hack-campaign/).

According to Microsoft whistleblower Andrew Harris, Microsoft spent years ignoring a vulnerability while he proposed fixes to the "security nightmare." Instead, Microsoft feared it might lose its government contract by warning about the bug and allegedly downplayed the problem, choosing profits over security, ProPublica [reported](https://www.propublica.org/article/microsoft-solarwinds-golden-saml-data-breach-russian-hackers).

This apparent negligence led to [one of the largest cyberattacks in US history,](https://arstechnica.com/security/2023/08/microsoft-cloud-security-blasted-for-its-culture-of-toxic-obfuscation/) and officials' sensitive data was compromised due to Microsoft's security failures. The China-linked hackers stole 60,000 US State Department emails, Reuters [reported](https://www.reuters.com/technology/microsoft-president-testify-before-house-panel-over-security-lapses-2024-06-13/). And several federal agencies were hit, giving attackers access to sensitive government information, including data from the National Nuclear Security Administration and the National Institutes of Health, ProPublica reported. Even Microsoft itself was breached, with a Russian group accessing senior staff emails this year, including their "correspondence with government officials," Reuters reported.

- www.cnn.com

- 4M

Lawyers for Elon Musk on Tuesday moved to dismiss the billionaire’s [lawsuit against OpenAI](https://www.cnn.com/2024/03/01/tech/elon-musk-lawsuit-openai-sam-altman/index.html) and CEO Sam Altman, ending a months-long legal battle between co-founders of the artificial intelligence startup.

Musk — who co-founded OpenAI in 2015 — sued the company in March, accusing the ChatGPT maker of abandoning its original, nonprofit mission by reserving some of its most advanced AI technology for private customers. The lawsuit had sought a jury trial and for the company, Altman and co-founder and president Greg Brockman to pay back any profit they received from the business.

- www.404media.co

- 4M

A group of hackers that says it believes “AI-generated artwork is detrimental to the creative industry and should be discouraged” is targeting people who use a popular interface for the AI image generation software Stable Diffusion, hacking them through a malicious extension for that interface shared on Github.

ComfyUI is an extremely popular graphical user interface for Stable Diffusion that’s shared freely on Github, making it easier for users to generate images and modify their image generation models. ComfyUI\_LLMVISION, the extension that was compromised to hack users, is a ComfyUI extension that allowed users to integrate large language models GPT-4 and Claude 3 into the same interface.

The ComfyUI\_LLMVISION Github page is currently down, but a Wayback Machine archive of it from June 9 states that it was “COMPROMISED BY NULLBULGE GROUP.”

creator

to

Right now, it’s all being funded by one person, Zhang Jingna (a photographer that recently sued and won her case when someone plagiarized her work) but it’s grown so quickly she got hit with a $96K bill for one month.

creator

to

If they do, it’s going to be a bad time for them, since Cara has Glaze integration and encourages everyone to use it. https://blog.cara.app/blog/cara-glaze-about

- www.wired.com

- 4M

When Microsoft CEO [Satya Nadella](https://www.wired.com/story/microsofts-satya-nadella-is-betting-everything-on-ai/) revealed the new Windows AI tool that can answer questions about your web browsing and laptop use, he said one of the [“magical” things](https://youtu.be/uHEPBzYick0?si=qxga6HpbXrCLGQvt\&t=257) about it was that the data doesn’t leave your laptop; the [Windows Recall system](https://www.wired.com/story/microsoft-recall-alternatives/) takes screenshots of your activity every five seconds and saves them on the device. But security experts say that data may not stay there for long.

Two weeks ahead of [Recall’s launch on new Copilot+ PCs on June 18](https://www.wired.com/story/everything-announced-microsoft-surface-event-2024/), security researchers have demonstrated how preview versions of the tool store the screenshots in an unencrypted database. The researchers say the data could easily be hoovered up by an attacker. And now, in a warning about how Recall could be abused by criminal hackers, Alex Hagenah, a cybersecurity strategist and ethical hacker, has released a demo tool that can automatically extract and display everything Recall records on a laptop.

Dubbed [TotalRecall](https://github.com/xaitax/TotalRecall)—yes, after the 1990 sci-fi film—the tool can pull all the information that Recall saves into its main database on a Windows laptop. “The database is unencrypted. It’s all plain text,” Hagenah says. Since Microsoft revealed Recall in mid-May, security researchers have repeatedly compared it to [spyware or stalkerware](https://www.wired.com/story/how-to-check-for-stalkerware/) that can track everything you do on your device. “It’s a Trojan 2.0 really, built in,” Hagenah says, adding that he built TotalRecall—which he’s releasing on GitHub—in order to show what is possible and to encourage Microsoft to make changes before Recall fully launches.

- techcrunch.com

- 4M

Artists have finally had enough with Meta’s predatory AI policies, but Meta’s loss is Cara’s gain. An artist-run, anti-AI social platform, [Cara](https://cara.app/) has grown from 40,000 to 650,000 users within the last week, catapulting it to the top of the App Store charts.

Instagram is a necessity for many artists, who use the platform to promote their work and solicit paying clients. But Meta is using public posts to [train its generative AI](https://www.cnet.com/tech/services-and-software/how-to-opt-out-of-instagram-and-facebook-using-your-posts-for-ai/) systems, and only [European users](https://about.fb.com/news/h/bringing-generative-ai-experiences-to-people-in-europe/) can opt out, since they’re protected by [GDPR](https://techcrunch.com/2024/04/15/consent-or-pay-open-letter-edpb/) laws. Generative AI has become so front-and-center on Meta’s apps that artists reached their breaking point.

- www.forbes.com

- 4M

Google’s chief privacy officer, Keith Enright, will depart the tech giant after 13 years, with no plans yet to replace him, as the company restructures its teams in charge of privacy and legal compliance.

Staff were informed of Enright’s departure in mid-May, according to two sources with knowledge of the matter. One told *Forbes* the news came as a shock to employees, as Enright was well-liked and respected, having steered Google’s privacy team through years in which its data handling practices were held under a microscope by lawmakers, regulators and civil courts.

Matthew Bye, Google’s head of competition law, will be leaving as well, after 15 years with the company and during a critical moment for Google when it comes to antitrust. Last month, the company wrapped up closing arguments in a [landmark competition trial](https://www.forbes.com/sites/richardnieva/2023/09/11/google-antitrust-trail-25th-birthday/?sh=7e2fdf4110e4 "https://www.forbes.com/sites/richardnieva/2023/09/11/google-antitrust-trail-25th-birthday/?sh=7e2fdf4110e4") brought on by the Department of Justice, over Google’s contracts with device manufacturers that push users to Google search. Bye did not respond to a request for comment.

- doublepulsar.com

- 4M

**Q. Is this really as harmful as you think?**

A. Go to your parents’ house, your grandparents’ house, etc., and look at their Windows PC, look at the software installed in the past year, and try to use the device. Run some antivirus scans. There’s no way this implementation doesn’t end in tears. There’s a reason there’s a trillion-dollar security industry, and that most problems revolve around malware and endpoints.

- www.extremetech.com

- 4M

Boeing and NASA are moving ahead with the upcoming Starliner demonstration launch despite an active helium leak. The launch is now on the books for Saturday, June 1, at 12:25 p.m. EDT. If all goes as planned, Starliner will rendezvous with the International Space Station the following day and return to Earth on June 10. If not, it will be another embarrassing setback for Boeing's troubled spacecraft.

Technicians [discovered helium escaping](https://www.extremetech.com/aerospace/nasa-scrubs-starliners-may-25-launch-attempt) from the fuel system earlier this month after valve issues [caused NASA to halt the last launch attempt](https://www.extremetech.com/aerospace/boeing-starliner-launch-halted-because-of-buzzing-rocket). NASA said in a recent news conference that the extremely slow leak does not pose a danger to the spacecraft. This is based on an exhaustive inspection of Starliner, which was removed from the launchpad along with the Atlas V so the valve in ULA's rocket could be swapped. It was then that the team noticed the helium leak in Starliner.

Even though the optics of launching with an active leak aren't ideal for the troubled Boeing craft, NASA says engineers have done their due diligence during this multi-week pause. Every space launch has risk tolerances, and NASA has judged this one to be in bounds. Helium is a tiny atom that is difficult to fully contain—even the highly successful SpaceX Dragon has occasionally flown with small leaks. While helium is part of the propulsion system, this inert gas is not used as fuel. Helium is used to pressurize the system and ensure fuel is available to the spacecraft's four "doghouse" thruster assemblies and the launch abort engines.

- mainichi.jp

- 5M

TOKYO -- A 25-year-old man has been served a fresh arrest warrant for allegedly creating a computer virus using generative artificial intelligence (AI), the Metropolitan Police Department (MPD)'s cybercrime control division announced on May 28, in what is believed to be the first such case in Japan.

Ryuki Hayashi, an unemployed resident of the Kanagawa Prefecture city of Kawasaki, was served the warrant on suspicion of making electronic or magnetic records containing unauthorized commands.

Hayashi is accused of creating a virus similar to ransomware, which destroys computer data and demands ransom in cryptocurrency, using his home computer and smartphone on March 31, 2023. He has reportedly admitted to the allegations, telling police, "I thought I could do anything by asking AI. I wanted to make easy money."

- futurism.com

- 5M

You know how Google's new feature called AI Overviews is prone to spitting out [wildly incorrect answers to search queries](https://www.cnn.com/2024/05/24/tech/google-search-ai-results-incorrect-fix/index.html)? In one instance, AI Overviews told a user [to use glue on pizza](https://futurism.com/the-byte/googles-ai-glue-on-pizza-flaw) to make sure the cheese won't slide off (*pssst*...please don't do this.)

Well, according to an [interview at *The Verge*](https://www.theverge.com/24158374/google-ceo-sundar-pichai-ai-search-gemini-future-of-the-internet-web-openai-decoder-interview) with Google CEO Sundar Pichai published earlier this week, just before criticism of the outputs really took off, these "[hallucinations](https://www.ibm.com/topics/ai-hallucinations)" are an "inherent feature" of AI large language models (LLMs), which are what drive AI Overviews, and this feature "is still an unsolved problem."

- www.404media.co

- 5M

In exchange for selling them repair parts, Samsung requires independent repair shops to give Samsung the name, contact information, phone identifier, and customer complaint details of everyone who gets their phone repaired at these shops, according to a contract obtained by 404 Media. Stunningly, it also requires these nominally independent shops to “immediately disassemble” any phones that customers have brought them that have been previously repaired with aftermarket or third-party parts and to “immediately notify” Samsung that the customer has used third-party parts.

Respectfully requesting that in the future, you read articles before replying.

And:

This is all over a battery in a watch.